Visual Servoing

Tutorial Level: Intermediate

Many applications require aligning the arm end effector with respect to some (possibly moving) visual reference. Visual Servoing is a technique commonly used in these cases.

This tutorial will walk through two example Objectives that will teach you how to:

- Define a desired relative positioning between the robot end effector and a visual target (an AprilTag).

- Move the robot end effector in closed loop towards the desired alignment reference.

Setup

For this example, we will use a Universal Robots UR5e simulated in Gazebo. To launch this configuration, run:

moveit_pro run -c picknik_ur_gazebo_config

Teach the desired end effector pose relative to the target

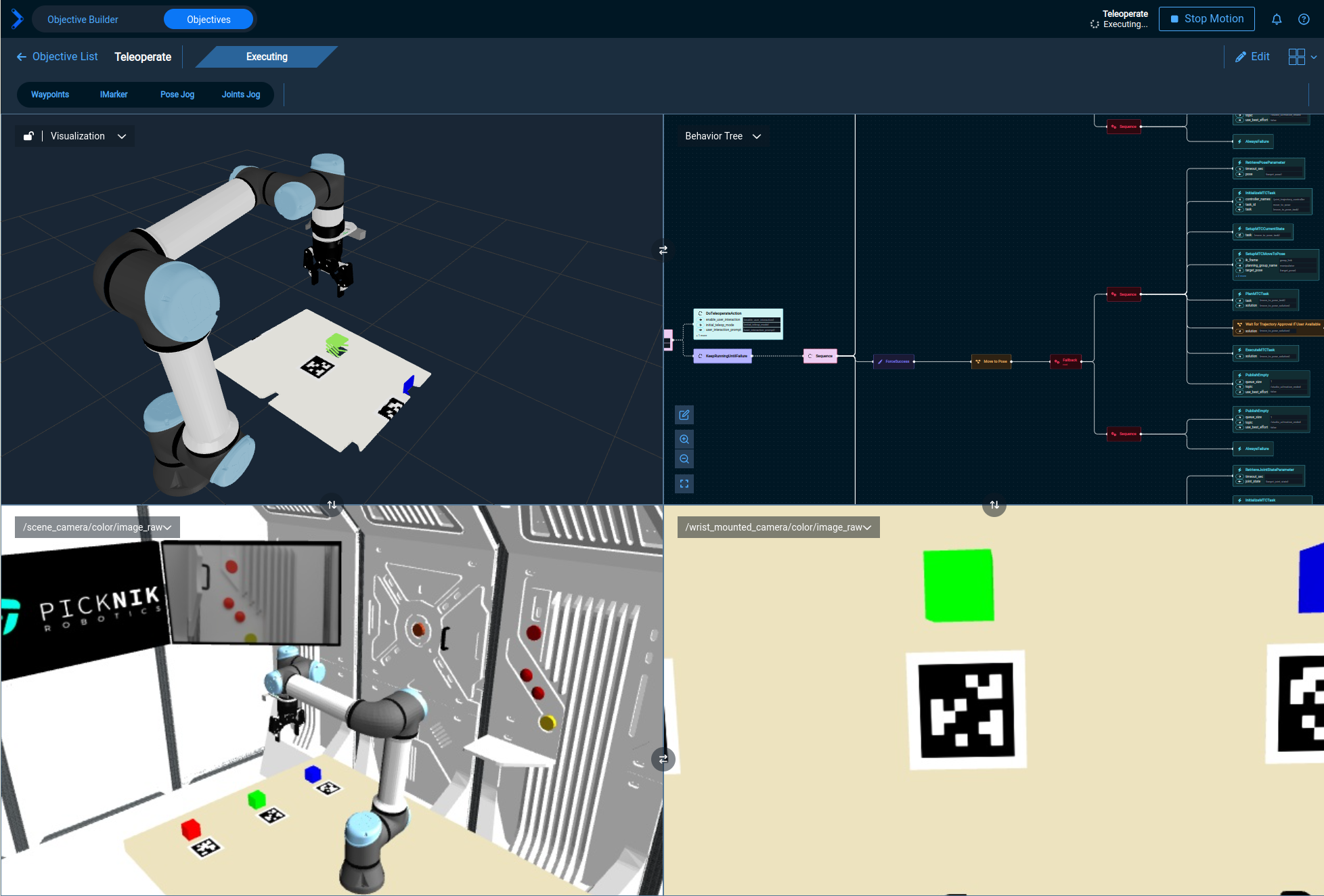

Start by teleoperating the arm to a desired pose relative to an AprilTag, using the Teleoperate Objective.

You can select the scene camera in the visualization dropdown, pick a 3 tile layout, or run the Take Snapshot Objective to visualize the AprilTags.

An AprilTag needs to be visible, and you will need to know the tag ID, which will be used in the next Objectives.

In this case, this is tag ID 2.

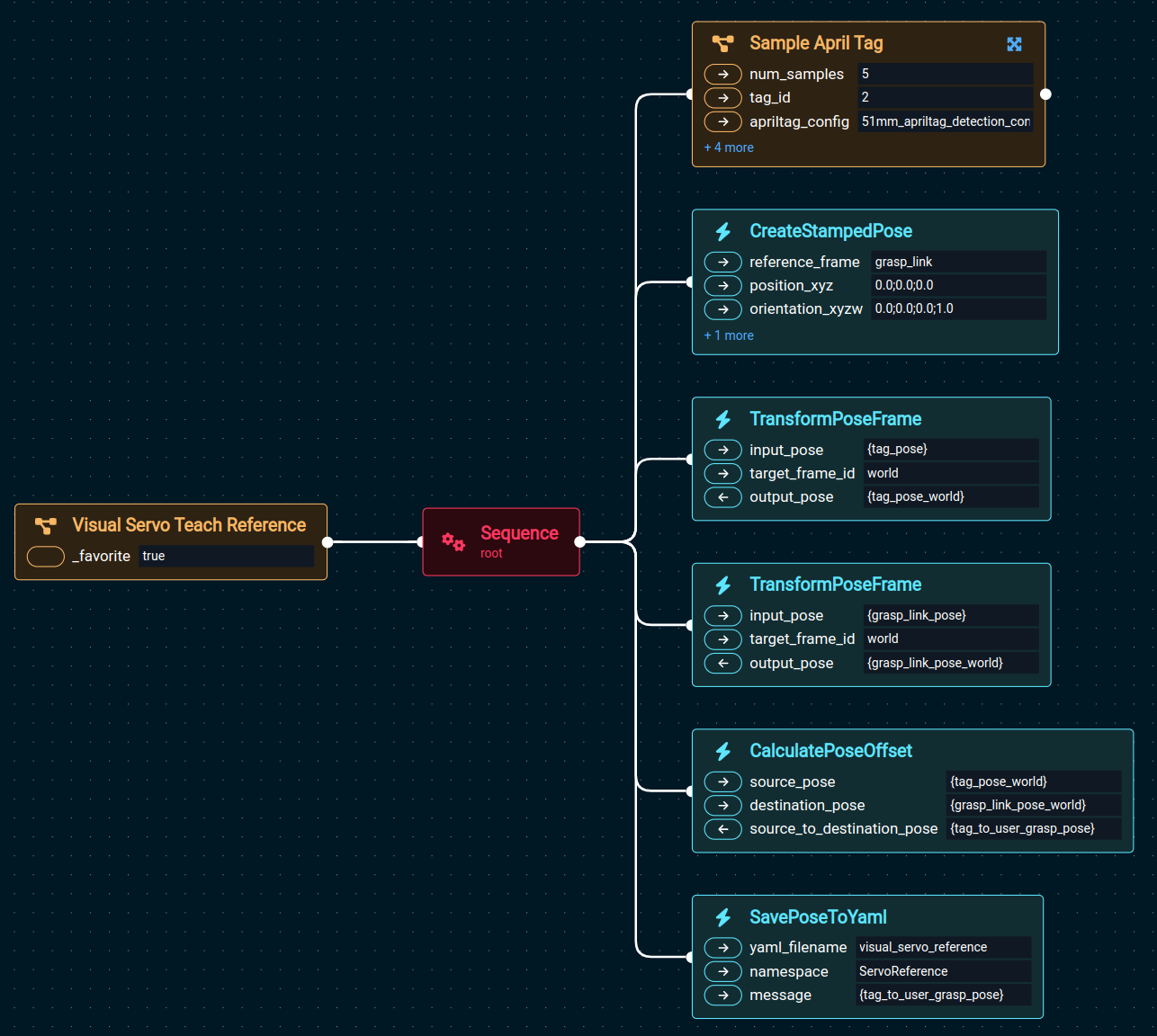

Then go to the Objectives panel and click on the Visual Servo Teach Reference Objective.

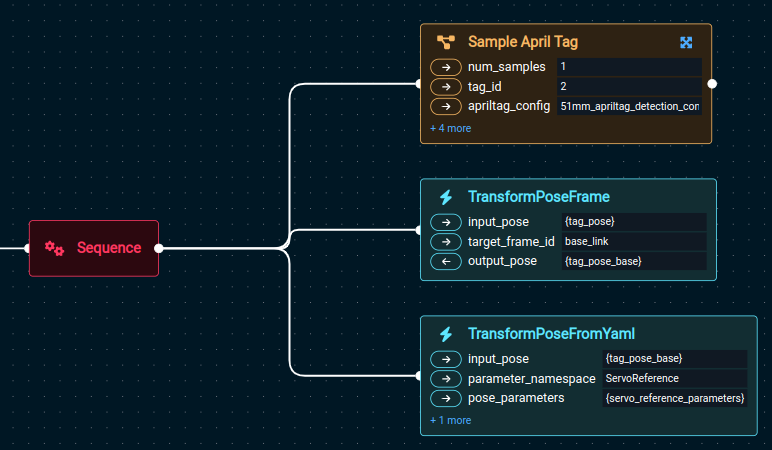

It should look something like this:

This Objective can be used to define a desired relative pose between the robot end effector and an AprilTag.

That relative pose will be stored in a YAML file in your package’s objectives directory.

Here is what each Behavior does, inside that Objective:

Sample April Tag: Detects an AprilTag with the giventag_idand tag parameters from a YAML file (size, etc.).num_samplesis the number of detection’s that will be taken and averaged.CreateStampedPose: Gets the currentgrasp_linkpose, i.e., the tip of the manipulator.TransformPoseFrame(first instance): Transforms the tag pose to theworldframe.TransformPoseFrame(second instance): Transforms thegrasp_linkpose to theworldframe.CalculatePoseOffset: Computes and returns the relative pose betweentagandgrasp_link.SavePoseToYaml: Stores the relative pose in a YAML file in the Objectives directory, to be used later.

Run the Visual Servo To Reference Objective

Now go back to the Teleoperate Objective and move the robot away from the reference pose you just stored.

Make sure the target AprilTag is still visible in the image.

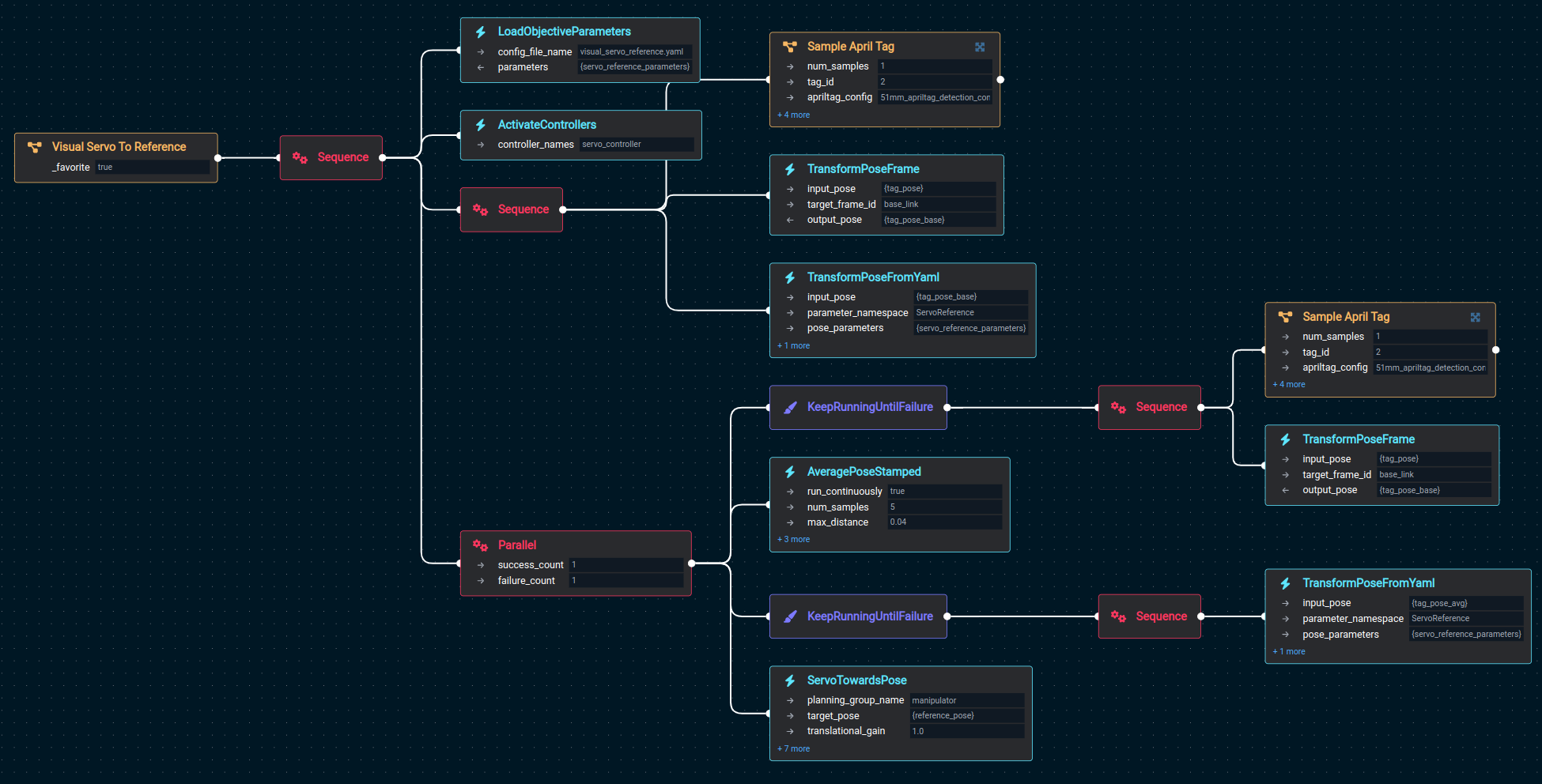

Then, in the Objectives panel, click on Visual Servo To Reference.

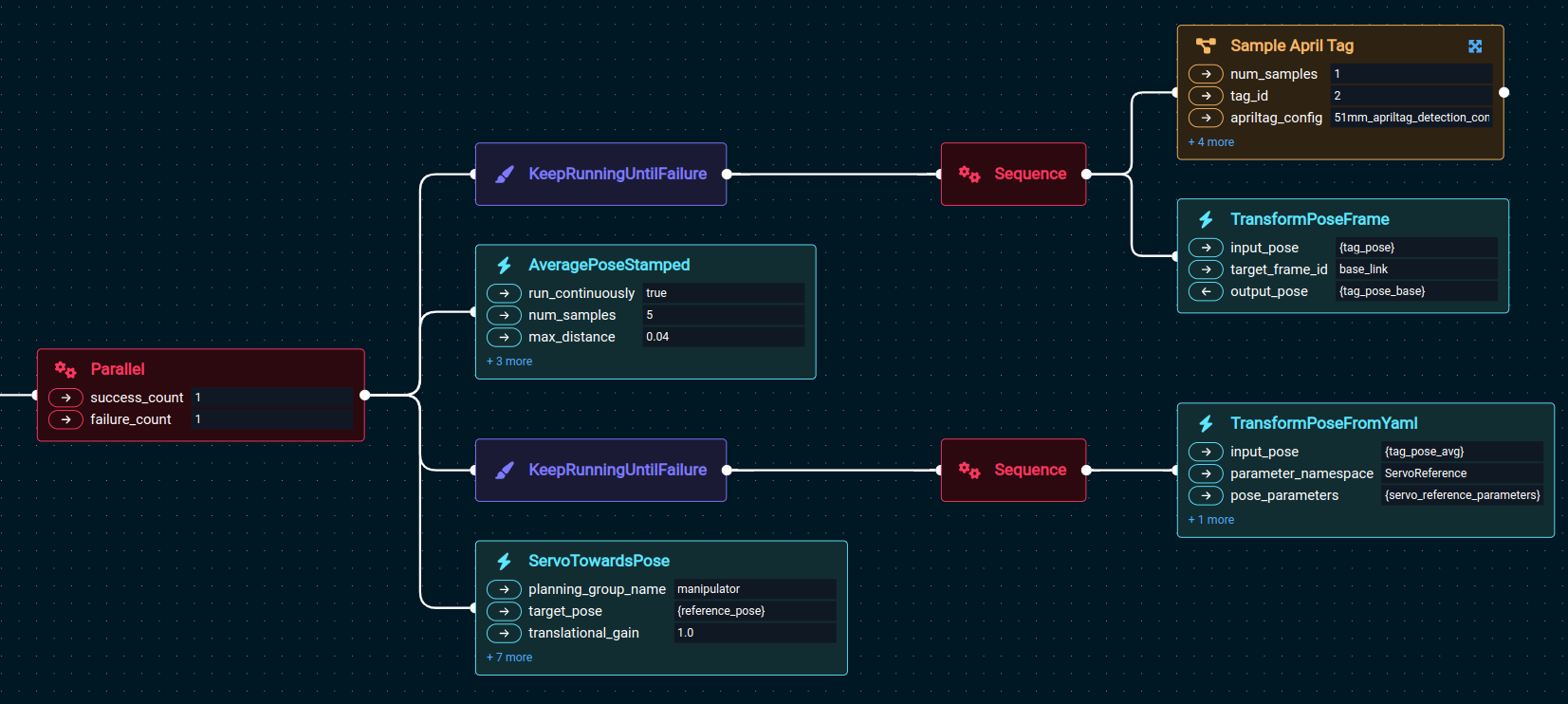

You should see an Objective like this:

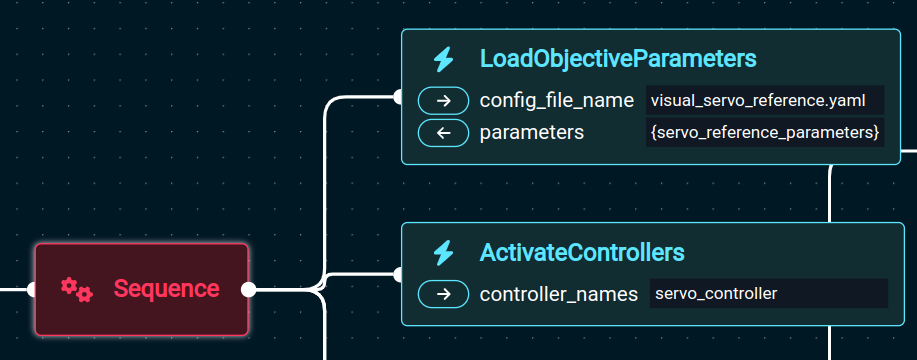

Let’s go in sections. The first two Behaviors load configuration settings from a yaml file and prepare everything for the remaining Behaviors in this Objective to run:

LoadObjectiveParameters: Loads the file that we created with the previous Objective, containing the target offset pose relative to the AprilTag.ActivateControllers: Activates the Servo controller, required for theServoBehavior to work.

The next sequence of three Behaviors makes sure there is at least one AprilTag detection available before starting the servoing Behavior.

Sample April Tag: Detects an AprilTag with the giventag_idand tag parameters in a YAML file (size, etc.).TransformPoseFrame: Transforms the tag pose to theworldframe, to give the Servoing Behavior a target in a fixed frame of reference.TransformPoseFromYaml: Applies a transform to the detected AprilTag pose. This is the desired relative transform we stored previously with theVisual Servo Teach ReferenceObjective.

Finally, the last block of Behaviors under a Parallel node execute the servoing motion.

Keep Running Until Failure(first instance): This is the same sequence we used previously to detect an AprilTag and transform its pose intoworldcoordinates. It will run continuously while the AprilTag is detected in the image.AveragePoseStamped: This Behavior applies an averaging filter to the detected AprilTag poses. It will continuously compute the average of the last 10 detections.Keep Running Until Failure(second instance): Applies a transform to the detected AprilTag pose. This is the desired relative transform we stored previously with theVisual Servo Teach ReferenceObjective.ServoTowardsPose: This is the Behavior that computes and executes the motion. It will continuously move the robot tip towards the target, until the exit conditions are met (more details below).

The entire Parallel block will keep running while none of the Behaviors fail or return success.

It will stop as soon as there is at least one failure, or one Behavior succeeds.

Since KeepRunningUntilFailure and AveragePoseStamped never succeed (they run indefinitely), the Parallel block can only succeed if ServoTowardsPose returns SUCCESS.

Running the Objective will control the robot towards the relative pose you stored earlier, like this:

Below we will see the conditions under which ServoTowardsPose returns success.

ServoTowardsPose details

ServoTowardsPose moves the robot end effector towards a Cartesian target_pose.

With every tick, the error between the current tip pose (for a given planning group) and target_pose is computed.

That error is also the direction along which the robot tip has to move to decrease the distance to the target.

Therefore, with every tick, this Behavior will send a Cartesian velocity to MoveIt Pro Servo, to move along the direction that points towards the target.

The Cartesian velocity will be proportional (with user-defined gains) to the distance to the target, but capped at the given velocity limits specified in the Behaviors Ports.

Cartesian velocities are published to Servo at a periodic rate, as geometry_mgs::msg::TwistStamped messages.

The Behavior will run indefinitely until cancelled or until the distance to the target falls below the specified thresholds for an amount of time.

More specifically, the Behavior defines the following ports:

planning_group_name: Name of the planning group to control.target_pose: Ageometry_msgs::msg::PoseStampedmessage with the target pose. Can be given in any known frame.translational_gain: Multiplier for the translational part of the pose error. Use a value of 0 to disable translations.rotational_gain: Multiplier for the rotational part of the pose error. Use a value of 0 to disable rotations.max_translational_vel: Maximum Cartesian-space translational velocity in m/s.max_rotational_vel: Maximum Cartesian-space rotational velocity in rad/s.exit_threshold_translation: The translation error must be below this value for the Behavior to finish.exit_threshold_rotation: The rotation error must be below this value for the Behavior to finish.exit_threshold_time: Translation and rotation errors must be below their thresholds for this amount of time (in seconds) for the Behavior to finish. Use a value of 0 to disable.publish_rate: The rate (times per second) at which to publish Twist messages to Servo.

The Behavior will return successfully if the translation and rotation errors are below exit_threshold_translation and exit_threshold_rotation continuously for at least exit_threshold_time seconds.