Create a Simple Visual Servoing Objective

Tutorial Level: Advanced

In this tutorial you will learn how to make an Objective to track an AprilTag fiducial marker mounted on an object of interest, using the robot’s end effector.

Prerequisites for completing this tutorial:

- Familiarity building and running Objectives through the MoveIt Studio user interface, see the Getting Started tutorials.

- A calibrated camera publishing synchronized CameraInfo and Image messages to ROS 2 topics. The camera frame should be included in the robot’s URDF for retrieving frame transforms from TF2.

- A configuration for Cartesian endpoint jogging of your arm using MoveIt Servo. This is verifiable through the Endpoint tab in the MoveIt Studio user interface.

- Have an AprilTag Fiducial Marker.

We generally recommend markers from the

36h11family to start, though the detection node will work with any tag from the standard library.

Overview

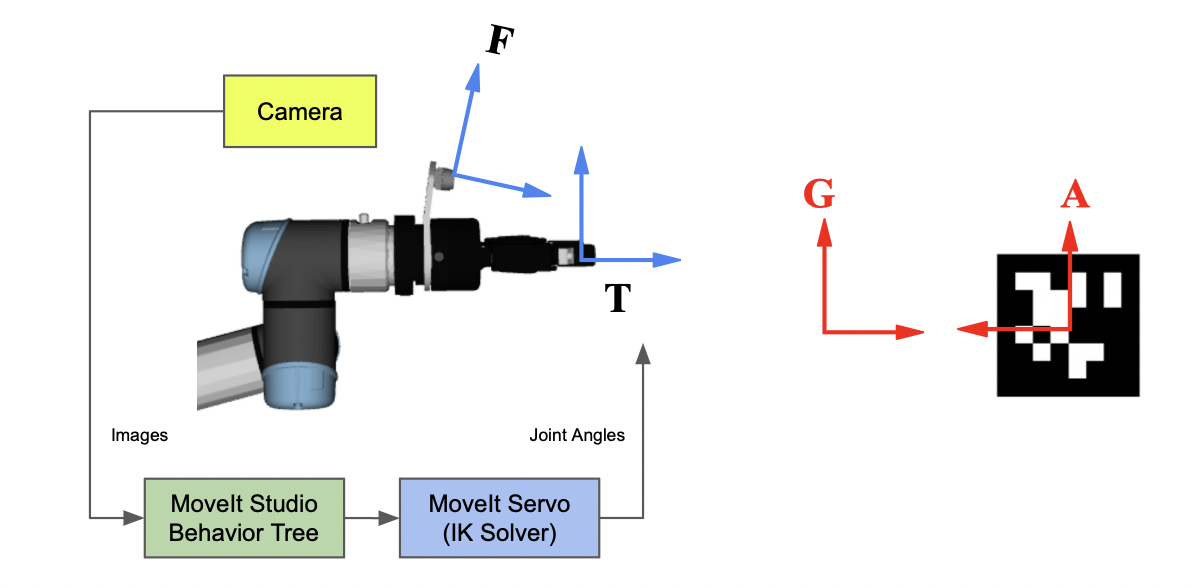

Visual servoing is a technique for using camera feedback to control the motion of a robot. This tutorial provides steps for building an Objective that identifies the pose of an AprilTag in a camera feed, determines the desired grasp point relative to the detected tag, filters the results, and then computes and publishes Cartesian twists to drive the tool towards the goal pose.

The default configuration assumes a wrist mounted camera and AprilTag to do localization of a grasp point. There are four frames that are relevant to understanding this configuration,

FThe camera frame. Used for localization of the AprilTag.TThe tool frame, in the case of MoveIt Studio we generally refer to this as themanual_grasp_link.AThe detected AprilTag frame. Determined by image feedback and fromF.GThe goal frame, or the desired pose for the end effector. For now we will hard-code this as a transform relativeA.

Adding Required Behaviors

There are several Behaviors specific to this Objective that are not included in MoveIt Studio’s core Behavior loader.

To ensure MoveIt Studio has access to the required Behaviors, modify the robot’s configuration package to ensure the following are in the list behavior_loader_plugins:

behavior_loader_plugins:

# Plugin for core behaviors provided with MoveIt Studio

core:

- "moveit_studio::behaviors::CoreBehaviorsLoader"

# Plugin for prototyped Behaviors to run Visual Servoing objectives

visual_servo_behaviors:

- "moveit_visual_servo::behaviors::VisualServoBehaviorLoader"

Building and Running the Objective

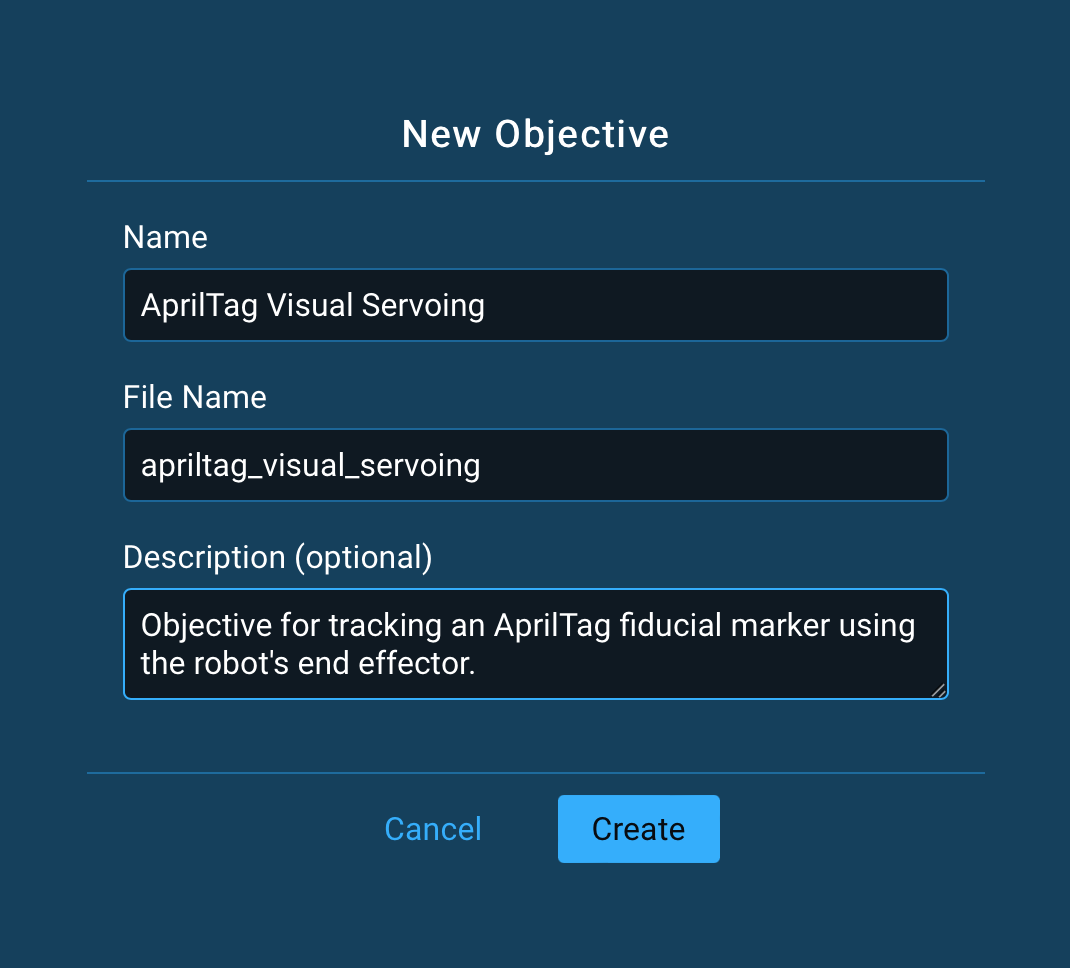

With the Behaviors now added to the configuration package, you are ready to build and configure the Objective through the MoveIt Studio user interface! Launch MoveIt Studio, open the Objective Builder, and create a new Objective:

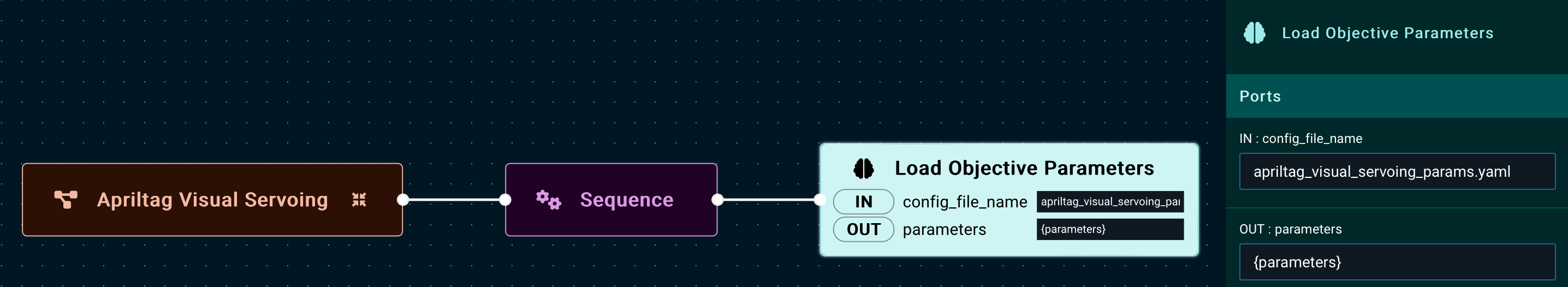

The first Behavior added to the Objective is to load the Objectives parameters file.

Add a LoadObjectiveParameters node and set the config_file_name to apriltag_visual_servoing_params.yaml.

Leave the parameters output port as {parameters}.

For more information, refer to Parameterizing Behaviors using Configuration Parameters.

This blank YAML file will be automatically created at $HOME/.config/moveit_studio/${CONFIG_PACKAGE_NAME}/objectives/apriltag_visual_servoing_params.yaml.

Using a text editor, copy the contents below into your YAML file.

# These are default for the AprilTag detection node. These can be overridden depending on your

# chosen tag's family and parameters. For more information, refer to

# https://github.com/christianrauch/apriltag_ros#configuration

GetApriltagPose:

apriltag_family_name: 36h11

# Note that more than one tag can be detected per image.

apriltag_ids:

- 0

apriltag_sizes:

- 0.028

apriltag_names:

- frame_id0

max_hamming: 0

nthreads: 1

quad_decimate: 1

quad_sigma: 0

refine_edges: true

z_up: true

# Parameters for the visual servo detection node.

VisualServo:

# Values to clamp commanded angular and linear velocities

max_angular_output: 1

max_linear_output: 1

# Linear and angular gains on the Cartesian error between the start and target pose

# We start these really low and go up!

proportional_gain_angular: 1

proportional_gain_linear: 1

# The rate to publish twist commands

servo_spin_rate: 0.02

# The desired tool frame to align with the goal pose, must match MoveIt Servo's tool frame.

target_servo_frame: 'manual_grasp_link'

# The fixed world frame to do velocity computations in

fixed_servo_frame: 'base_link'

# Whether or not to include a velocity feed-forward term.

# In general you do not want these.

include_ff: false

ff_velocity_gain_angular: 0

ff_velocity_gain_linear: 0

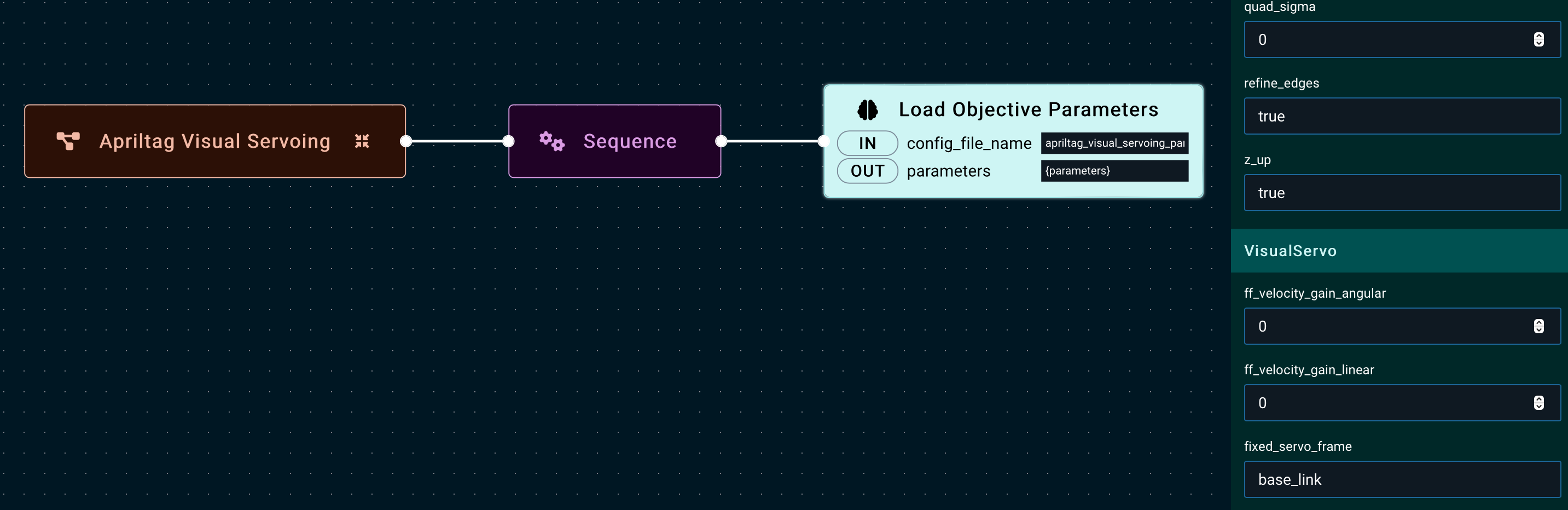

With that in place, return to the Objective Builder.

Now, when selecting the LoadObjectiveParameters node, the YAML parameters from the file should be configurable in the sidebar (as seen in the image below).

For now, configure the parameters under StreamApriltagPose to match whatever tag you have selected for this tutorial.

In particular you will need the apriltag_family_name, apriltag_ids, and apriltag_sizes parameters.

All the other parameters can remain with the default values.

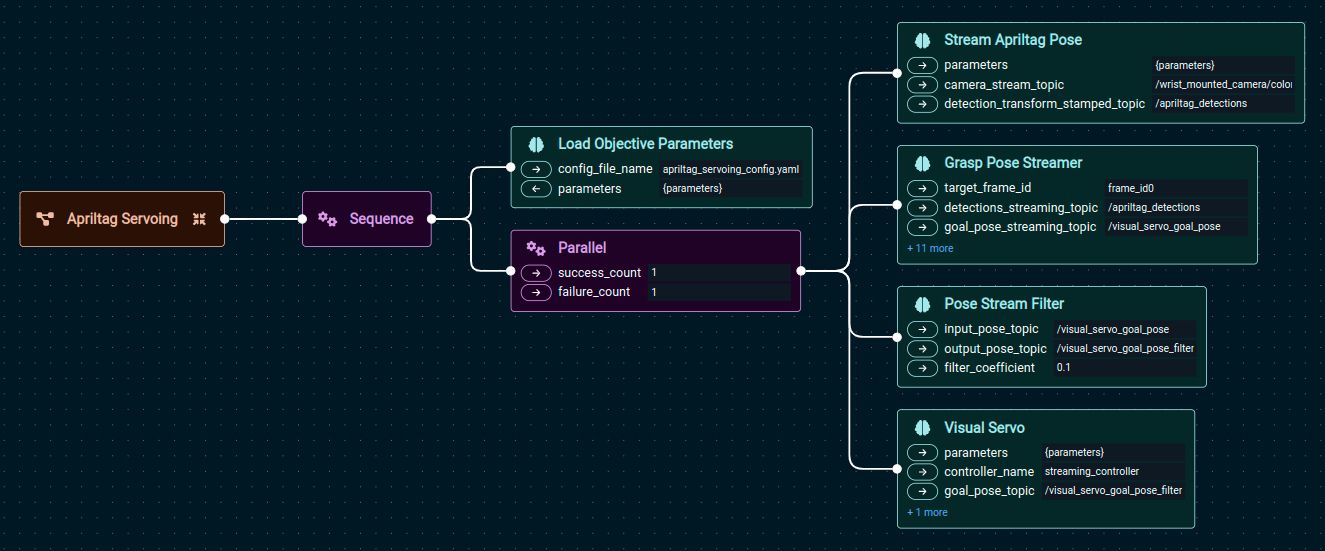

With the parameters in place, use a Parallel node to execute all remaining nodes simultaneously.

Add the GetApriltagPose, GraspPoseStreamer, PoseStreamFilter, and VisualServo nodes all as children of the Parallel node, in order.

The structure of the tree should be the following:

Here is a run-down of the configurable parameters for each node:

ParallelRefer to the node description for more information.

All children are executed concurrently in the same thread. In this Objective, if a single node returns success we wish to report success, as it will indicate that the end effector has arrived at the goal pose.

GetApriltagPoseConfiguration for the detection logic in this node is done through YAML parameters.

The

camera_stream_topicparameter specifies the image topic that will be be subscribed to and processed by the AprilTag detector. Thedetection_transform_stamped_topicspecifies the topic name to publish tag detections. This will be a list of stamped transforms from frameFto frameA, given in the camera’s frameF, as defined above.GraspPoseStreamerAs mentioned previously, this node determines the target grasp pose,

G, for the tool based on a given tag detection. As of now,Gis a hard-coded pose defined relative the AprilTag’s frame,A, given in frameA. Many of the defaults here should be sufficient for a basic use case. The default goal pose is set to a 10cm offset from the AprilTag, but this can be modified by changing thegoal_pose_translation_*andgoal_pose_orientation_*values accordingly. The*_thresholdparameters define the success threshold for the linear and angular distances between the current tool pose and the goal pose, or|G - T|.PoseStreamFilterApplies a simple smoothing filter to the specified incoming pose topic, and publishes to the specified output topic. The

filter_coefficientis configurable, though in general some smoothing is recommended.VisualServoActivates and publishes twist commands to MoveIt Servo to drive the tool,

T, to the goal pose,G. Most parameters for the servo node itself are configured in the objective’s YAML file,apriltag_visual_servoing_params.yaml. You must set thecontroller_nameto the same controller used in your MoveIt Servo configuration (and so should match theTeleoperateobjective). Thegoal_pose_topicshould match the output topic from the filter node. Thepose_error_topicpublishes the current measured error between the current and target poses for the tool tip, namely|G - T|. It is subscribed to by theGraspPoseStreamerand used to measure success.

To summarize the data flow between these nodes:

The Objective is now ready to be run! Fix (or hold) an AprilTag in the camera frame and watch the arm try to chase it.

Note

By default, the proportional gains in the Visual Servo node are small.

As you become more familiar with your MoveIt Servo configuration and the tunable parameters in this Objective,

we recommend increasing the proportional_gain_angular and proportional_gain_linear parameters to get faster response from the Behavior.

Note

Once you have your Objective running with well-tuned parameters, remember to copy it into your configuration package to save it permanently!

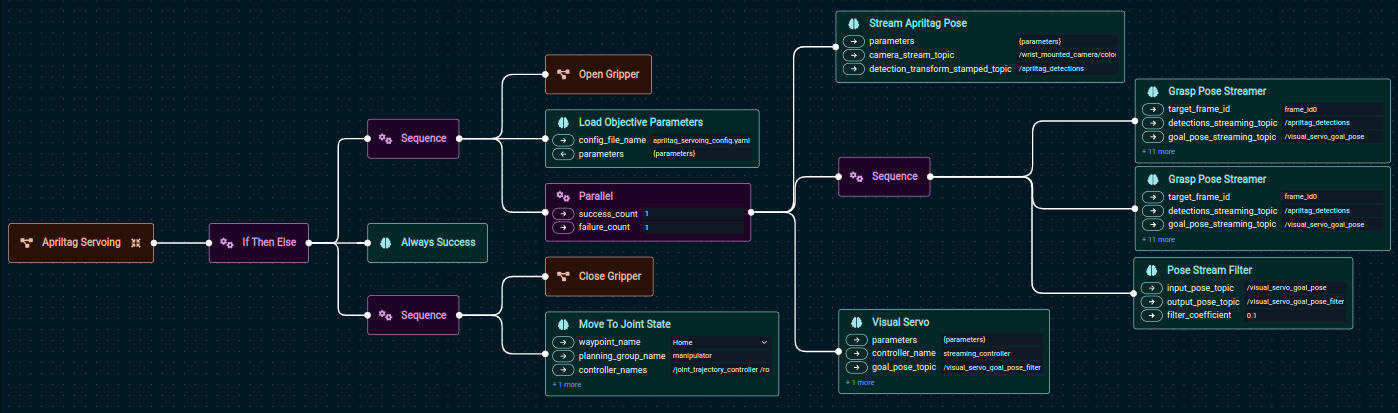

Expanding the Objective’s Capabilities

With the basic Objective in place, there are many ways to expand this functionality!

For this demonstration in the PickNik office, we configured the left arm to translate with the AprilTag and fixture, with the right arm running the Visual Servoing objective. We have set the grasp pose relative the detected tag so that the tool aligns with the handle.

This Objective includes additional Behaviors for opening and closing the gripper around the target handle,

as well as an additional GraspPoseStreamer node to support alignment with the target before “plunging” towards it.

We also have built out error handling, and additional safety logic around the objective as fallbacks for controller or other errors.

The full Behavior tree for this setup is given below,

We hope you enjoy experimenting with this Objective and Behaviors!