Pick and Place Using MoveIt Task Constructor

Pick and place tasks describe a broad range of robotic applications like harvesting fruits, sorting items on a conveyor belt, or gearbox assembly. At its core, all these tasks require the robot to pick up an item and place it at some location. Excluding grasp pose synthesis and place pose identification, the pick and place motion can be divided roughly into these sequential stages:

- Move to Grasp Pose

- Pick object and retreat

- Move to Place pose

- Place and retreat

This how-to guide will show you how to implement a pick and place objective in MoveIt Pro using behavior trees and MoveIt Task Constructor (MTC).

This tutorial assumes you are familiar with MTC. If not, learn about the basics in this MoveIt2 tutorial.

Setup

-

If desired, you can change your user workspace using:

moveit_pro configure -

Add the moveit_studio_example_behaviors package to your user workspace:

git clone [email protected]:PickNikRobotics/moveit_studio_example_behaviors.git -

Build the package using (if you have not built a Docker overlay of your user workspace yet it will be built first):

moveit_pro build user_workspace --colcon-args="--packages-select moveit_studio_example_behaviors" -

To launch the configuration used in this example, run:

moveit_pro run -c moveit_studio_example_behaviors -

Launch the Pick And Place Example Objective

Building a Pick and Place Behavior Tree

To orchestrate the pick and place task, a complex behavior tree is needed that plans and executes the pick and place motions of the arm.

Let's break this down into the individual pieces:

First, we make sure the gripper is open. We use a named subtree to clarify the intent of the MoveGripperAction Behavior:

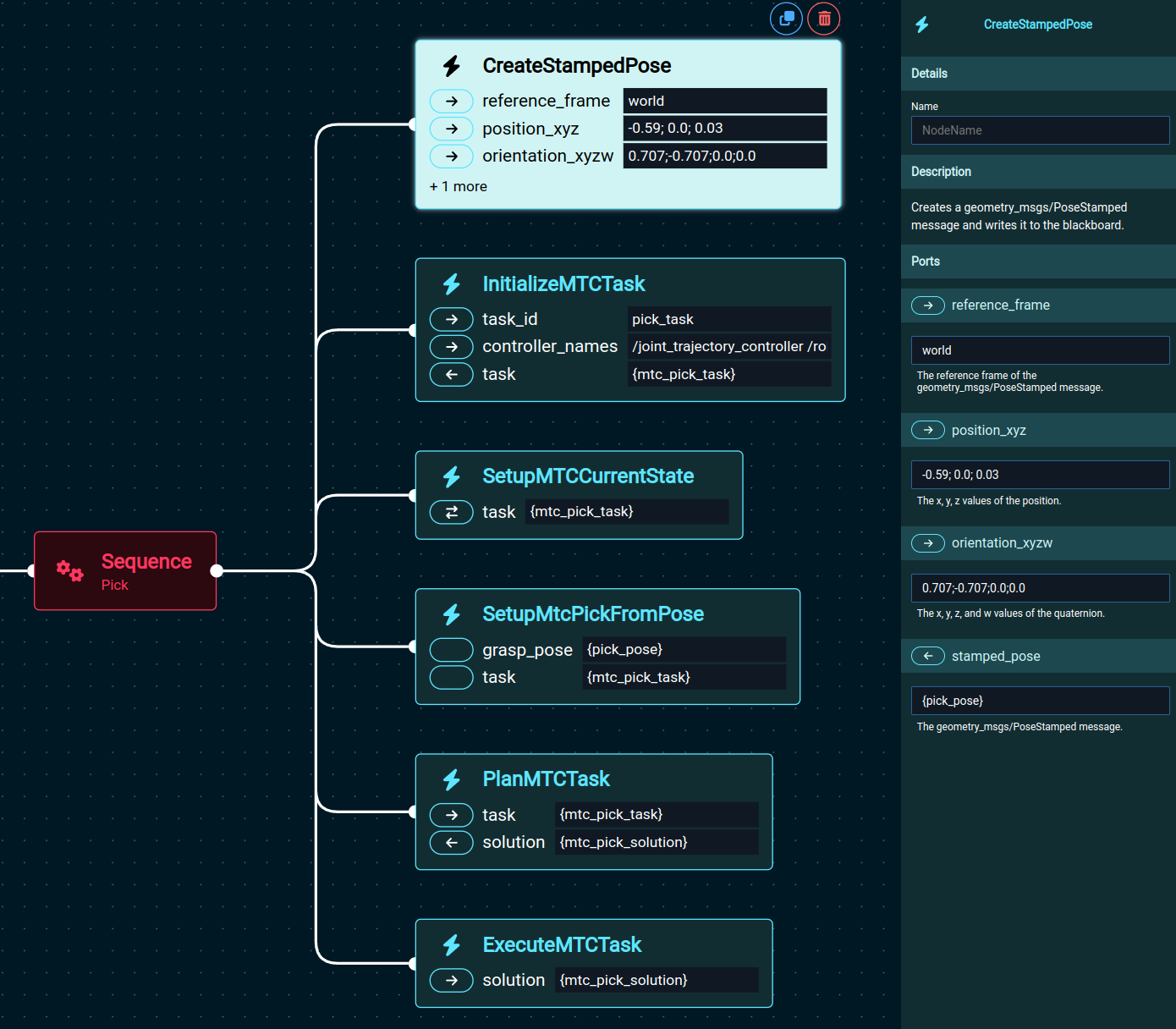

Second, we create a sequence to plan and execute the picking motion:

CreateStampedPose uses the stamped_pose output port to place the pose defined by the input ports (reference_frame, position_xyz, orientation_xyzw) on the blackboard with the name pick_pose.

For this example, the pose is defined in the behavior tree, but in more advanced applications a subtree that determines the pick pose from camera data would be used.

MoveIt Pro introduces a simplified way to create, plan, and execute MTC tasks. Rather than having to write the whole task yourself, you can use Behaviors to set up and extend the task with common building blocks, and focus on the parts that are unique to your application. To build MTC tasks, you can choose from a variety of building blocks. This also makes reusing components of existing tasks much easier. In the pick sequence, we use the following building blocks:

- InitializeMTCTask creates a task object and initializes the common global properties like trajectory execution info. The task object is then stored to the blackboard to be modified by following behaviors.

- SetupMTCCurrentState takes the created task and sets up a generator stage corresponding to the current state as the task's start state.

- SetupMtcPickFromPose is our custom Behavior which adds the stages required to describe the pick planning problem to the task. We will discuss the details of this stage in the next section.

- PlanMTCTask calls the

plan()function of the given MTC task and stores the solution to the blackboard. - ExecuteMTCTask reads a MTC solution from the blackboard and executes it with the move_group.

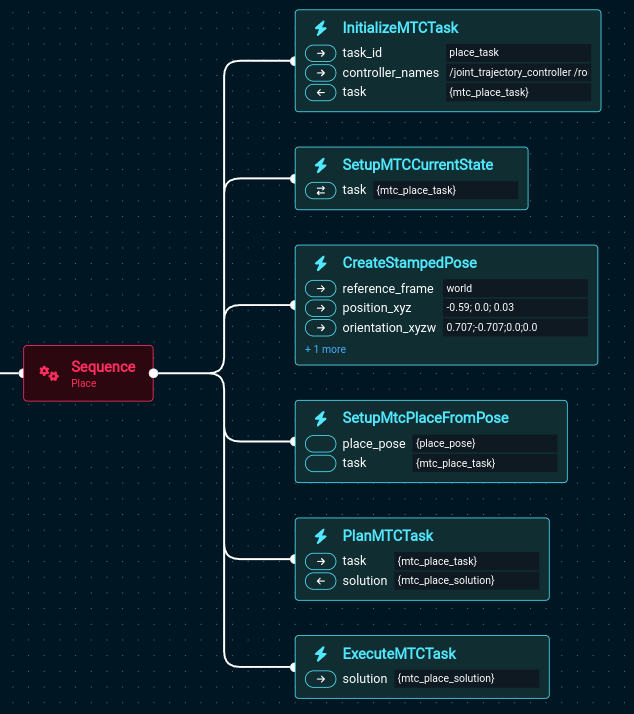

The place sequence is very similar to the pick sequence:

- InitializeMTCTask creates and initializes a task object and stores it to the blackboard.

- SetupMTCCurrentState takes the created task and sets up a generator stage corresponding to the current state as the task's start state.

- CreateStampedPose is used to generate the desired place pose and store it in the blackboard.

- SetupMtcPlaceFromPose is our custom Behavior which adds the stages required to describe the place planning problem to the task.

- PlanMTCTask calls the

plan()function of the given MTC task and stores the solution to the blackboard. - ExecuteMTCTask reads an MTC solution from the blackboard and executes it.

Any sequence of Behaviors can be converted to a subtree for use in other Objectives. You can do this by clicking on a Sequence block and selecting Convert to Subtree.

Building Pick Objective with MTC

This section will walk you through the code in the SetupMtcPickFromPose Behavior, which adds the stages that are necessary to plan a pick motion to the MTC task. You find the complete source code of this stage.

While we go sequentially through the code, the stages of an MTC task are executed in parallel during planning. Generator stages (e.g., grasp pose generation) create solution candidates and propagate them to its neighboring stages. An MTC task is successfully planned when a continuous sequence of motions is found.

The first stage to add is a Connect stage that computes a trajectory from the current state to the pre-grasp pose.

/** Move To Pre-Grasp Pose **/

auto stage = std::make_unique<moveit::task_constructor::stages::Connect>(

"Move to Pre-Approach Pose",

moveit::task_constructor::stages::Connect::GroupPlannerVector{ { kArmGroupName, mtc_pipeline_planner } });

stage->properties().configureInitFrom(moveit::task_constructor::Stage::PARENT,

{ kPropertyNameTrajectoryExecutionInfo });

stage->setTimeout(1.0);

container->add(std::move(stage));

In many cases, it makes sense to allow certain collisions during a motion to increase the likelihood of finding valid solutions. Therefore, for the approach and grasp motions, collisions between the grasp object and the robot hand are allowed. We do this by adding a ModifyPlanningScene stage:

auto stage =

std::make_unique<moveit::task_constructor::stages::ModifyPlanningScene>("Allow collision 1 (hand,object)");

stage->allowCollisions(kSceneObjectNameOctomap,

task.value()

->getRobotModel()

->getJointModelGroup(kEndEffectorGroupName)

->getLinkModelNamesWithCollisionGeometry(),

true);

container->add(std::move(stage));

The next stage computes the approach motion. To understand what is happening here, it is necessary to think backwards: Given an IK solution from the following pose generation stage, this stage tries to compute a motion for the robot that is a straight line path of 10 cm in the z-direction (lift the grasped object up). The start of the computed path is the pre-grasp pose, and the pose is determined by this stage based on the grasp pose generated in the GeneratePose stage.

auto stage = std::make_unique<moveit::task_constructor::stages::MoveRelative>("Approach", mtc_cartesian_planner);

stage->properties().configureInitFrom(moveit::task_constructor::Stage::PARENT,

{ kPropertyNameTrajectoryExecutionInfo });

stage->restrictDirection(moveit::task_constructor::stages::MoveRelative::BACKWARD);

stage->setGroup(kArmGroupName);

stage->setIKFrame(kHandFrameName);

geometry_msgs::msg::Vector3Stamped approach_vector_msg;

tf2::toMsg(approach_vector, approach_vector_msg.vector);

approach_vector_msg.header.frame_id = kHandFrameName;

stage->setDirection(approach_vector_msg);

stage->setTimeout(10);

container->add(std::move(stage));

For the grasp pose generation, collisions between the hand and the grasp object are disallowed because the grasp pose is not supposed to be in collision with the object to grasp.

auto stage =

std::make_unique<moveit::task_constructor::stages::ModifyPlanningScene>("Prevent collision 2 (hand,object)");

stage->allowCollisions(kSceneObjectNameOctomap,

task.value()

->getRobotModel()

->getJointModelGroup(kEndEffectorGroupName)

->getLinkModelNamesWithCollisionGeometry(),

false);

container->insert(std::move(stage));

The GeneratePose pose stage generates a Cartesian pick pose and the ComputeIK stage generates IK solution candidates. In this scope, solution candidates for the whole MTC task originate and are propagated in both directions.

// Specify pose to generate for

auto stage = std::make_unique<moveit::task_constructor::stages::GeneratePose>("Generate pose");

stage->properties().configureInitFrom(moveit::task_constructor::Stage::PARENT);

stage->properties().set("marker_ns", "grasp_frame");

stage->setPose(grasp_pose.value());

stage->setMonitoredStage(task.value()->stages()->findChild("current state")); // Hook into current state

// Compute IK

auto wrapper = std::make_unique<moveit::task_constructor::stages::ComputeIK>("Pose IK", std::move(stage));

wrapper->setMaxIKSolutions(kMaxIKSolutions);

wrapper->setTimeout(kIKTimeoutSeconds);

wrapper->setIKFrame(kHandFrameName);

wrapper->properties().configureInitFrom(moveit::task_constructor::Stage::PARENT, { "eef", "group" });

wrapper->properties().configureInitFrom(moveit::task_constructor::Stage::INTERFACE, { "target_pose" });

wrapper->setIgnoreCollisions(true);

container->add(std::move(wrapper));

Collisions between the hand and the collision object need to be allowed from here until the rest of the task since because the object is grasped in the next stage and then held by the robot.

auto stage =

std::make_unique<moveit::task_constructor::stages::ModifyPlanningScene>("Allow collision 3 (hand,object)");

stage->allowCollisions(kSceneObjectNameOctomap,

task.value()

->getRobotModel()

->getJointModelGroup(kEndEffectorGroupName)

->getLinkModelNamesWithCollisionGeometry(),

true);

container->insert(std::move(stage));

This stage closes the gripper to grasp the object.

auto stage =

std::make_unique<moveit::task_constructor::stages::MoveTo>("Close hand", mtc_joint_interpolation_planner);

stage->properties().configureInitFrom(moveit::task_constructor::Stage::PARENT,

{ kPropertyNameTrajectoryExecutionInfo });

stage->setGroup(kEndEffectorGroupName);

stage->setGoal(kHandCloseName);

container->add(std::move(stage));

Next, the grasped object is lifted up. This is done by another MoveRelative stage. Similar to Approach, the post grasp configuration is defined by this stage and is the end of a straight-line path of 10cm in the z-direction upwards.

auto stage = std::make_unique<moveit::task_constructor::stages::MoveRelative>("Retreat", mtc_cartesian_planner);

stage->properties().configureInitFrom(moveit::task_constructor::Stage::PARENT,

{ kPropertyNameTrajectoryExecutionInfo });

stage->setGroup(kArmGroupName);

stage->setIKFrame(kHandFrameName);

geometry_msgs::msg::Vector3Stamped retreat_vector_msg;

tf2::toMsg(approach_vector * -1, retreat_vector_msg.vector);

retreat_vector_msg.header.frame_id = kHandFrameName;

stage->setDirection(retreat_vector_msg);

container->add(std::move(stage));

Building Place Objective with MTC

The setup_mtc_place_from_pose works pretty similar to setup_mtc_pick_from_pose. You find the complete source code of this stage here. A ModifyPlanningScene stage is used to allow collisions between the hand and the grasped object. Afterwards, a Connect stage describes the motion of the robot from the current state to the pre-drop pose. From the pre-drop pose, MoveRelative generates the motion to the place pose. GeneratePose and ComputeIK are used to create place pose candidates. Collisions between the hand and grasped object are disabled during the pose generation stages to get valid solutions. Finally, the gripper is opened and the arm retreats from the placed object.

Next Steps

Now, you know how to build a simple pick and place Objective in MoveIt Pro by combining concepts like MTC and behavior trees. You could now take a look at other tutorials that show you how to solve sub-problems, like identifying the grasp pose with an april tag or adding orientation constraints to motion plans.